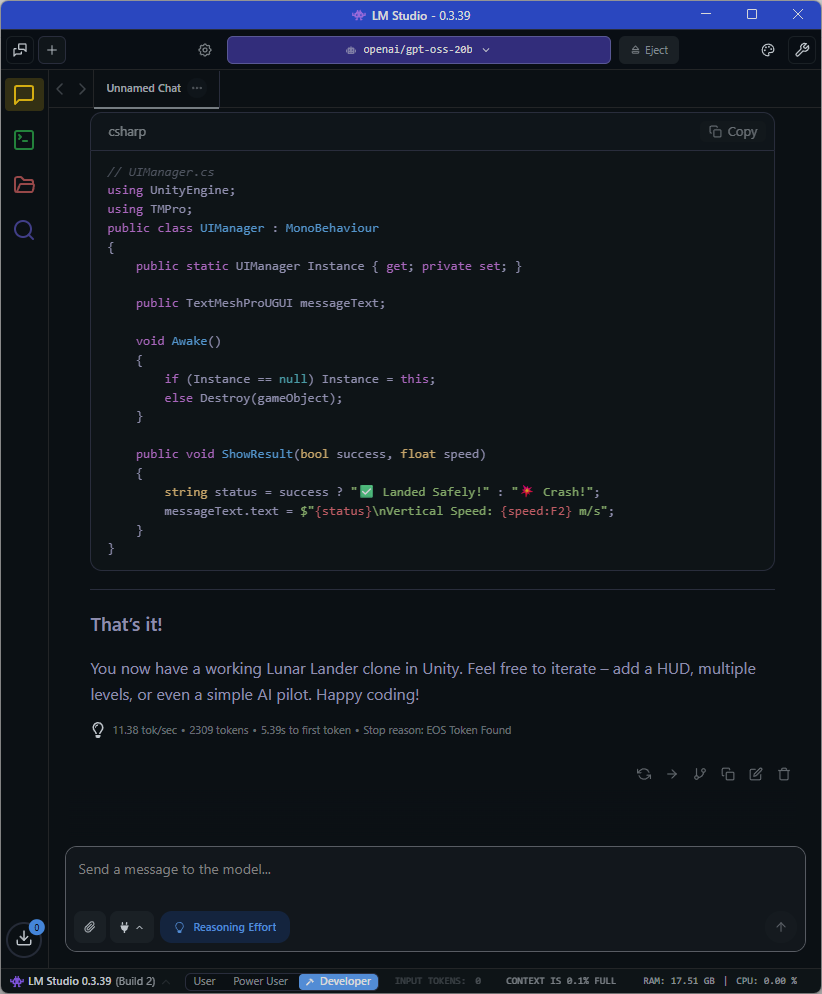

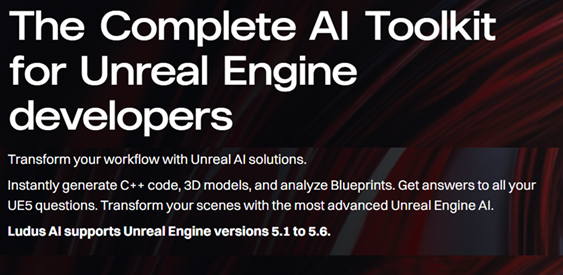

In preparation for my July 2025 CGDC presentation on AI-Assisted Game Development Tools, I spent a lot of time testing the latest beta version of the Ludus AI Blueprints Toolkit for Unreal Engine 5.6.

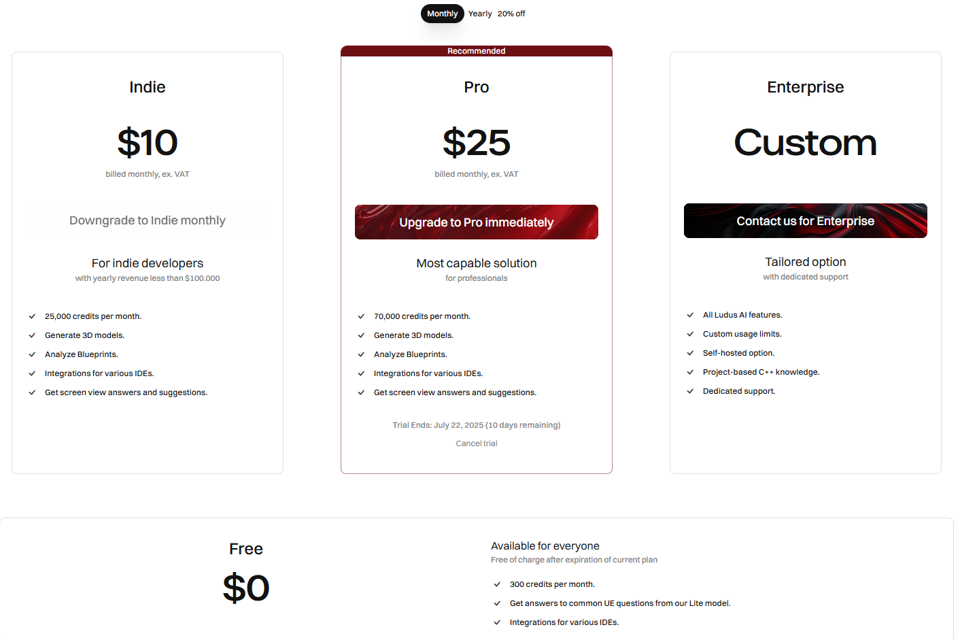

I did my Blueprints testing using their 14-day free Pro subscription trial, which includes 20,000 credits. They also provided additional credits for the closed beta period, which I really appreciated!

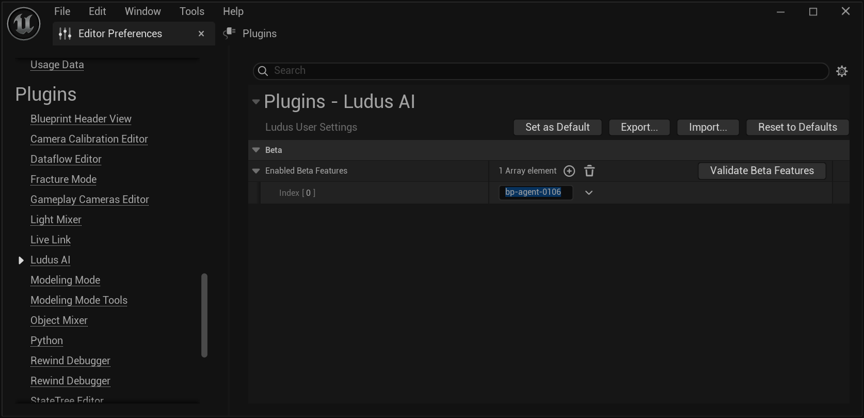

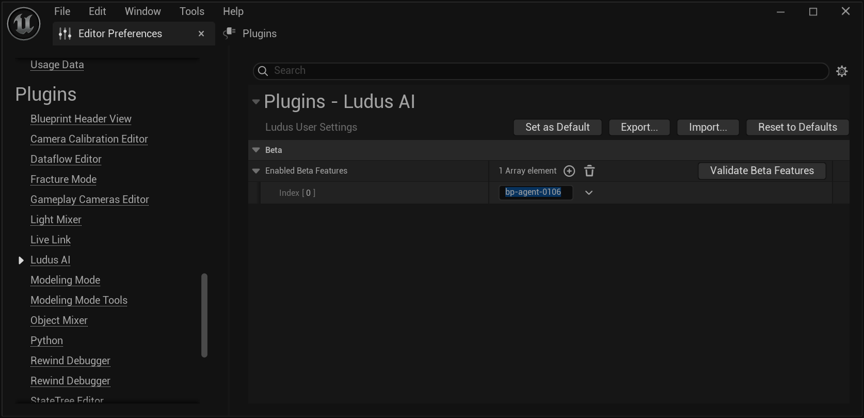

I was particularly focused on their new AI agent Blueprint generation features. They currently support Actor, Pawn, Game Mode, Level, Editor Utility, and Function Library Blueprints, with partial support for Widget (UMG) Blueprints. They plan to add support for Material, Niagara, Behaviour Tree, Control Rig, and MetaSound in the near future. Their goal is to fully integrate Blueprint generation into the main plugin interface. Once the Open Beta phase concludes, activation via a special beta code will no longer be necessary.

For my beta testing, I upgraded an old Kidware Software RPG academic game project from Unreal Engine 4.26 to Unreal Engine 5.6, then asked the new Ludus AI Blueprints agent to identify and fix the load issues I was getting after the upgrade.

The Ludus AI agent examined my upgraded Unreal Engine 5.6 project, determined which Blueprints were broken, and gave me a systematic plan to fix them. I then asked Ludus AI (in Agent mode) to apply all the recommended fixes and test them for me. You can watch the 5-minute video below showing the Ludus AI agent in action:

In Agent mode, Ludus AI made all the fixes without my help, and my RPG game project worked flawlessly in Unreal Engine 5.6. I was genuinely impressed! Below is the video I recorded of the fixed gameplay after Ludus AI worked its Unreal Engine 5.6 Blueprint repair magic:

Back in 2022, it took me many hours to identify and fix those same Unreal 5 upgrade errors when I did all the work manually. The Ludus AI Blueprints beta agent did all the repair work for me in approximately 5 minutes.

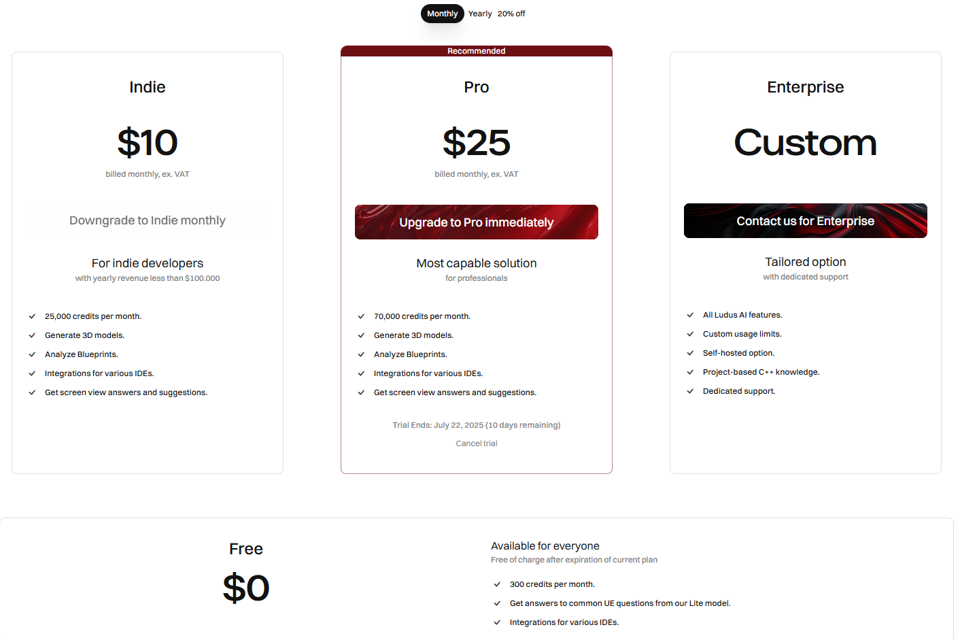

I was so impressed by the results of the beta Ludus AI Blueprints agent that I purchased a Ludus AI plugin subscription for myself. They offer several pricing tiers for Indie, Pro, and Enterprise customers:

They also offer a free subscription with 400 credits per month, so you can try out their plugin.

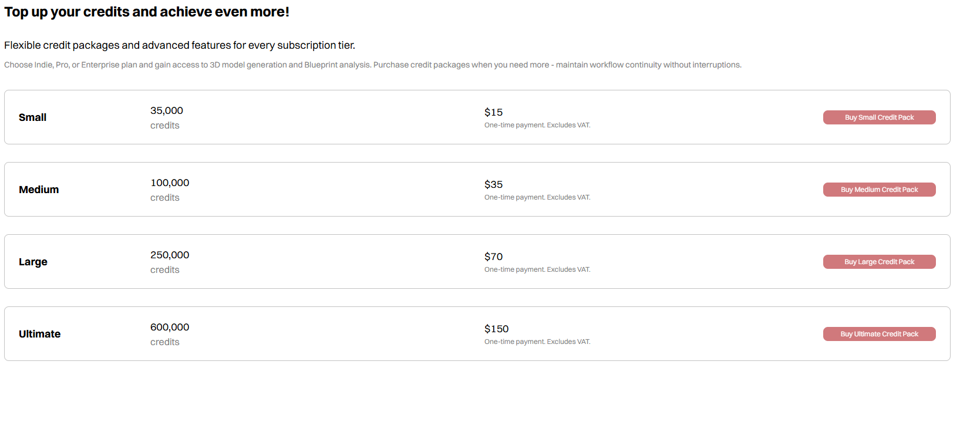

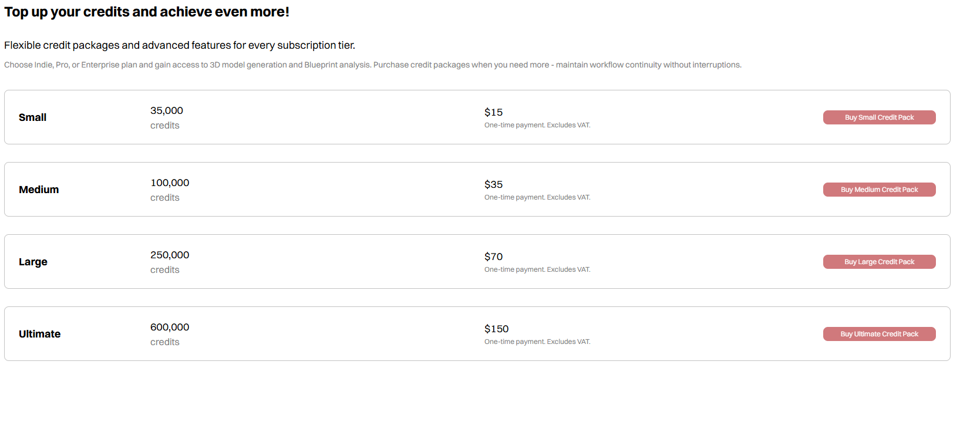

They also sell additional credit packages in case you burn through your credit allotment in a given month.

Support for the Ludus AI Blueprints 0.6.0 beta agent moved into Open Beta on July 29, 2025, so you can now try the new AI Blueprint beta features yourself at https://ludusengine.com/ with a 14-day free Ludus AI Pro evaluation subscription.

I plan to share another update on my testing of Ludus AI-assisted Unreal Engine Blueprint development in a future blog post.